Valid Microsoft DP-200 questions shared by Pass4itsure for helping to pass the Microsoft DP-200 exam! Get the newest Pass4itsure Microsoft DP-200 exam dumps with VCE and PDF here: https://www.pass4itsure.com/dp-200.html (227 Q&As Dumps).

[Free PDF] Microsoft DP-200 pdf Q&As https://drive.google.com/file/d/1MsPABHzc7aMSKZ_rHLt5zWq4rkiX8b3C/view?usp=sharing

Suitable for the complete Microsoft DP-200 learning pathway

The content is rich and varied, so studying does not become boring. You can prepare for the Microsoft DP-200 exam in several ways:

- Download the free PDF

- Answer practice questions modeled on the actual Microsoft DP-200 test

Microsoft DP-200 Implementing an Azure Data Solution


Pass4itsure offers the latest Microsoft DP-200 practice test free of charge (questions 1-13)

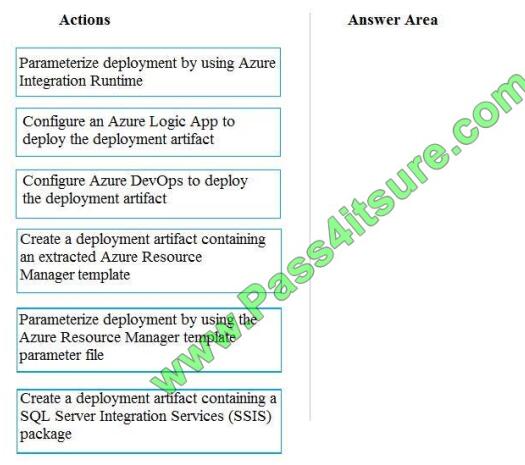

QUESTION 1

You need to ensure that phone-based polling data can be analyzed in the PollingData database.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Explanation/Reference:

All deployments must be performed by using Azure DevOps. Deployments must use templates used in multiple environments. No credentials or secrets should be used during deployments.

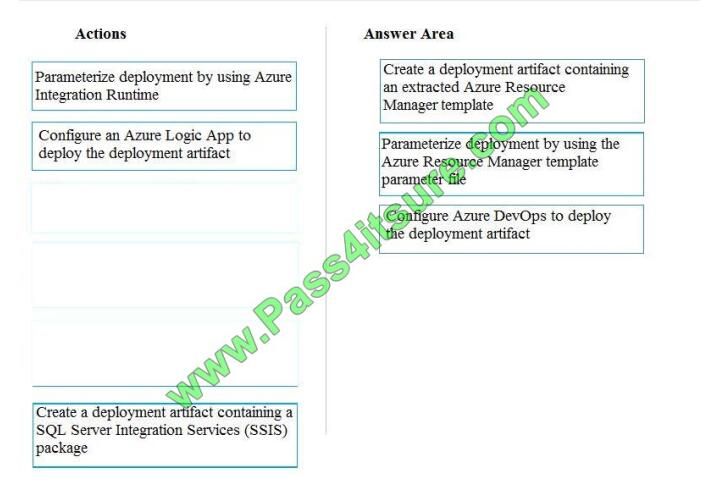

QUESTION 2

You are a data architect. The data engineering team needs to configure data synchronization between an on-premises Microsoft SQL Server database and Azure SQL Database.

Ad hoc and reporting queries are overutilizing the on-premises production instance. The synchronization process must:

- Perform an initial data synchronization to Azure SQL Database with minimal downtime

- Perform bi-directional data synchronization after initial synchronization

You need to implement this synchronization solution.

Which synchronization method should you use?

A. transactional replication

B. Data Migration Assistant (DMA)

C. backup and restore

D. SQL Server Agent job

E. Azure SQL Data Sync

Correct Answer: E

SQL Data Sync is a service built on Azure SQL Database that lets you synchronize the data you select bi-directionally across multiple SQL databases and SQL Server instances.

With Data Sync, you can keep data synchronized between your on-premises databases and Azure SQL databases to enable hybrid applications.

Compare Data Sync with Transactional Replication

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-sync-data

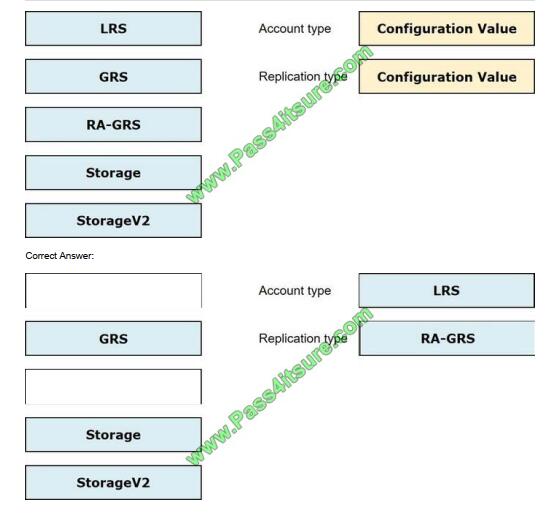

QUESTION 3

You need to provision the polling data storage account.

How should you configure the storage account? To answer, drag the appropriate Configuration Value to the correct Setting. Each Configuration Value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

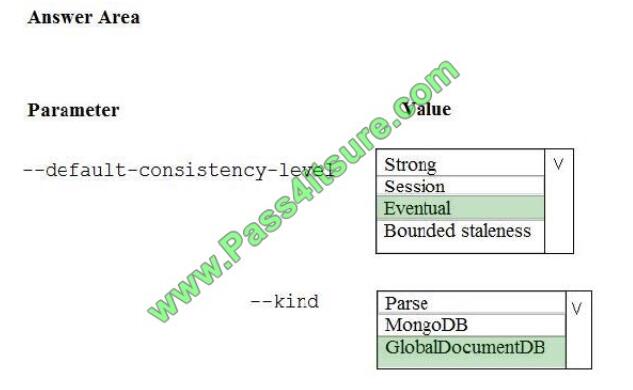

QUESTION 4

A company is planning to use Microsoft Azure Cosmos DB as the data store for an application. You have the following Azure CLI command:

az cosmosdb create –

Correct Answer:

Box 1: Eventual

With Azure Cosmos DB, developers can choose from five well-defined consistency models on the consistency spectrum. From strongest to most relaxed, the models are strong, bounded staleness, session, consistent prefix, and eventual consistency.

The following image shows the different consistency levels as a spectrum.

Box 2: GlobalDocumentDB

Select Core(SQL) to create a document database and query by using SQL syntax.

Note: The API determines the type of account to create. Azure Cosmos DB provides five APIs: Core(SQL) and MongoDB for document databases, Gremlin for graph databases, Azure Table, and Cassandra.

References:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

https://docs.microsoft.com/en-us/azure/cosmos-db/create-sql-api-dotnet

QUESTION 5

What should you implement to optimize SQL Database for Race Central to meet the technical requirements?

A. the sp_updatestats stored procedure

B. automatic tuning

C. Query Store

D. the dbcc checkdb command

Correct Answer: A

Scenario: The query performance of Race Central must be stable, and the administrative time it takes to perform optimizations must be minimized.

sp_updatestats runs UPDATE STATISTICS, which updates query optimization statistics on a table or indexed view. By default, the query optimizer already updates statistics as necessary to improve the query plan; in some cases you can improve query performance by using UPDATE STATISTICS or the stored procedure sp_updatestats to update statistics more frequently than the default updates.

Incorrect Answers:

D: DBCC CHECKDB checks the logical and physical integrity of all the objects in the specified database.

QUESTION 6

A company uses Azure SQL Database to store sales transaction data. Field sales employees need an offline copy of the database that includes last year's sales on their laptops when there is no internet connection available.

You need to create the offline export copy.

Which three options can you use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Export to a BACPAC file by using Azure Cloud Shell, and save the file to an Azure storage account

B. Export to a BACPAC file by using SQL Server Management Studio. Save the file to an Azure storage account

C. Export to a BACPAC file by using the Azure portal

D. Export to a BACPAC file by using Azure PowerShell and save the file locally

E. Export to a BACPAC file by using the SqlPackage utility

Correct Answer: BCE

You can export to a BACPAC file using the Azure portal.

You can export to a BACPAC file using SQL Server Management Studio (SSMS). The newest versions of SQL Server Management Studio provide a wizard to export an Azure SQL database to a BACPAC file.

You can export to a BACPAC file using the SQLPackage utility.

Incorrect Answers:

D: You can export to a BACPAC file using PowerShell. Use the New-AzSqlDatabaseExport cmdlet to submit an export database request to the Azure SQL Database service. Depending on the size of your database, the export operation may take some time to complete. However, the file is not stored locally.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-export
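As a rough illustration of option E, the sketch below assembles a SqlPackage export invocation in Python. The server, database, user, and file names are hypothetical placeholders, and the password is deliberately left out; it would have to be supplied separately (for example through /SourcePassword) before the command could actually run. It only builds and prints the argument list, so treat it as a starting point rather than a tested export procedure.

```python
import shlex


def build_export_command(server, database, user, target_file):
    """Build a SqlPackage Export invocation as an argument list.

    The password is intentionally omitted; supply it separately
    (for example with /SourcePassword) when running the command.
    """
    return [
        "SqlPackage",
        "/Action:Export",
        f"/SourceServerName:{server}",
        f"/SourceDatabaseName:{database}",
        f"/SourceUser:{user}",
        f"/TargetFile:{target_file}",
    ]


# Hypothetical names, used only for illustration.
cmd = build_export_command(
    "example.database.windows.net", "SalesDb", "exporter", "sales.bacpac"
)
print(shlex.join(cmd))
```

Because /TargetFile points at a local path, the resulting BACPAC lands on the machine that runs the tool, which is what the offline-copy scenario needs.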

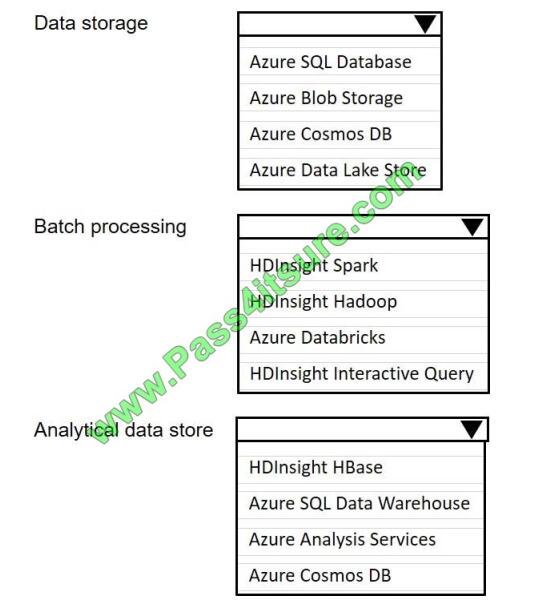

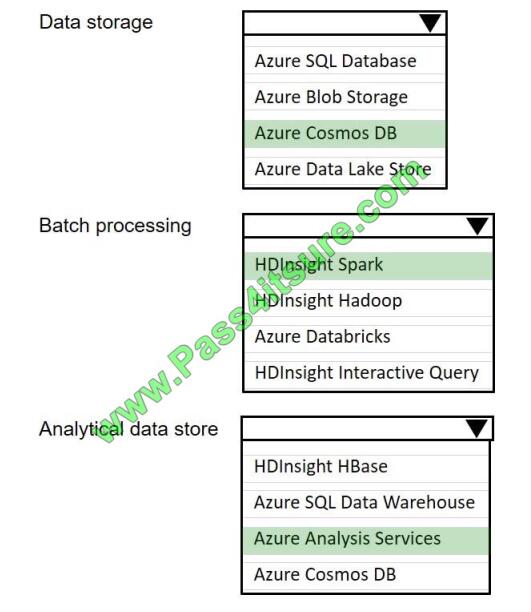

QUESTION 7

You are developing a solution using a Lambda architecture on Microsoft Azure.

The data at rest layer must meet the following requirements:

Data storage:

- Serve as a repository for high volumes of large files in various formats.

- Implement optimized storage for big data analytics workloads.

- Ensure that data can be organized using a hierarchical structure.

Batch processing:

- Use a managed solution for in-memory computation processing.

- Natively support Scala, Python, and R programming languages.

- Provide the ability to resize and terminate the cluster automatically.

Analytical data store:

- Support parallel processing.

- Use columnar storage.

- Support SQL-based languages.

You need to identify the correct technologies to build the Lambda architecture. Which technologies should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

QUESTION 8

You have an Azure data solution that contains an Azure SQL data warehouse named DW1.

Several users execute ad hoc queries to DW1 concurrently.

You regularly perform automated data loads to DW1.

You need to ensure that the automated data loads have enough memory available to complete quickly and successfully when the ad hoc queries run.

What should you do?

A. Hash distribute the large fact tables in DW1 before performing the automated data loads.

B. Assign a larger resource class to the automated data load queries.

C. Create sampled statistics for every column in each table of DW1.

D. Assign a smaller resource class to the automated data load queries.

Correct Answer: B

To ensure the loading user has enough memory to achieve maximum compression rates, use loading users that are a member of a medium or large resource class.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

QUESTION 9

You plan to create an Azure Databricks workspace that has a tiered structure. The workspace will contain the following three workloads:

- A workload for data engineers who will use Python and SQL

- A workload for jobs that will run notebooks that use Python, Spark, Scala, and SQL

- A workload that data scientists will use to perform ad hoc analysis in Scala and R

The enterprise architecture team at your company identifies the following standards for Databricks environments:

- The data engineers must share a cluster.

- The job cluster will be managed by using a request process whereby data scientists and data engineers provide packaged notebooks for deployment to the cluster.

- All the data scientists must be assigned their own cluster that terminates automatically after 120 minutes of inactivity. Currently, there are three data scientists.

You need to create the Databricks clusters for the workloads.

Solution: You create a Standard cluster for each data scientist, a High Concurrency cluster for the data engineers, and a High Concurrency cluster for the jobs.

Does this meet the goal?

A. Yes

B. No

Correct Answer: A

We need a High Concurrency cluster for the data engineers and the jobs.

Note:

Standard clusters are recommended for a single user. Standard can run workloads developed in any language: Python, R, Scala, and SQL.

A high concurrency cluster is a managed cloud resource. The key benefits of high concurrency clusters are that they provide Apache Spark-native fine-grained sharing for maximum resource utilization and minimum query latencies.

References:

https://docs.azuredatabricks.net/clusters/configure.html

QUESTION 10

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB.

You plan to copy the data from the storage account to an Azure SQL data warehouse.

You need to prepare the files to ensure that the data copies quickly.

Solution: You copy the files to a table that has a columnstore index.

Does this meet the goal?

A. Yes

B. No

Correct Answer: B

Instead, modify the files to ensure that each row is less than 1 MB.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data
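To make the "each row is less than 1 MB" guidance concrete, here is a minimal sketch that scans rows and reports the ones that would need trimming or splitting before the load. The function name, the UTF-8 assumption, and the choice of 1,000,000 bytes as the threshold are all assumptions made for illustration; check the loading guidance for the exact limit that applies to your files.

```python
# Assumed threshold: treat 1 MB as 1,000,000 bytes for this sketch.
MAX_ROW_BYTES = 1_000_000


def oversized_rows(lines, limit=MAX_ROW_BYTES, encoding="utf-8"):
    """Return the 1-based numbers of rows whose encoded size reaches the limit."""
    return [
        number
        for number, line in enumerate(lines, start=1)
        if len(line.encode(encoding)) >= limit
    ]


# Tiny in-memory sample: the third row carries a ~1.1 MB description.
sample = ["id,description", "1," + "x" * 10, "2," + "y" * 1_100_000]
print(oversized_rows(sample))
```

Rows flagged this way are the ones to shorten or restructure in the source files; changing the target table's index type does not change their size.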

QUESTION 11

You manage a solution that uses Azure HDInsight clusters.

You need to implement a solution to monitor cluster performance and status.

Which technology should you use?

A. Azure HDInsight .NET SDK

B. Azure HDInsight REST API

C. Ambari REST API

D. Azure Log Analytics

E. Ambari Web UI

Correct Answer: E

Ambari is the recommended tool for monitoring utilization across the whole cluster. The Ambari dashboard shows easily glanceable widgets that display metrics such as CPU, network, YARN memory, and HDFS disk usage. The specific metrics shown depend on cluster type. The “Hosts” tab shows metrics for individual nodes so you can ensure the load on your cluster is evenly distributed.

The Apache Ambari project is aimed at making Hadoop management simpler by developing software for provisioning, managing, and monitoring Apache Hadoop clusters. Ambari provides an intuitive, easy-to-use Hadoop management web UI backed by its RESTful APIs.

References: https://azure.microsoft.com/en-us/blog/monitoring-on-hdinsight-part-1-an-overview/

https://ambari.apache.org/

QUESTION 12

You plan to implement an Azure Cosmos DB database that will write 100,000 JSON documents every 24 hours. The database will be replicated to three regions. Only one region will be writable.

You need to select a consistency level for the database to meet the following requirements:

Guarantee monotonic reads and writes within a session.

Provide the fastest throughput.

Provide the lowest latency.

Which consistency level should you select?

A. Strong

B. Bounded Staleness

C. Eventual

D. Session

E. Consistent Prefix

Correct Answer: D

Session: Within a single client session reads are guaranteed to honor the consistent-prefix (assuming a single “writer” session), monotonic reads, monotonic writes, read-your-writes, and write-follows-reads guarantees. Clients outside of the session performing writes will see eventual consistency.

References: https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels
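The reasoning behind this answer can be sketched in a few lines of Python. The sketch assumes that every level at least as strong as Session also provides the session guarantees, and that a more relaxed level generally means lower latency and higher throughput; the list order follows the strongest-to-most-relaxed spectrum, and the helper names are hypothetical.

```python
# Consistency levels ordered from strongest to most relaxed.
LEVELS = ["Strong", "BoundedStaleness", "Session", "ConsistentPrefix", "Eventual"]


def levels_at_least(level):
    """Return every level that is at least as strong as `level`."""
    return LEVELS[: LEVELS.index(level) + 1]


def most_relaxed(levels):
    """Return the most relaxed level among the candidates."""
    return max(levels, key=LEVELS.index)


# Monotonic reads and writes within a session require Session or stronger;
# among those candidates, the most relaxed one is the cheapest to serve.
candidates = levels_at_least("Session")
choice = most_relaxed(candidates)
print(candidates, choice)
```

Under those assumptions the candidates are Strong, Bounded Staleness, and Session, and Session is the most relaxed of the three, which matches answer D.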

QUESTION 13

A company builds an application to allow developers to share and compare code. The conversations, code snippets, and links shared by people in the application are stored in a Microsoft Azure SQL Database instance. The application allows for searches of historical conversations and code snippets.

When users share code snippets, the code snippet is compared against previously shared code snippets by using a combination of Transact-SQL functions including SUBSTRING, FIRST_VALUE, and SQRT. If a match is found, a link to the match is added to the conversation.

Customers report the following issues:

- Delays occur during live conversations

- A delay occurs before matching links appear after code snippets are added to conversations

You need to resolve the performance issues.

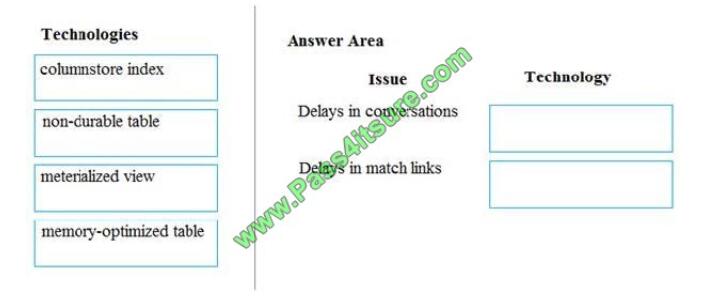

Which technologies should you use? To answer, drag the appropriate technologies to the correct issues. Each technology may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

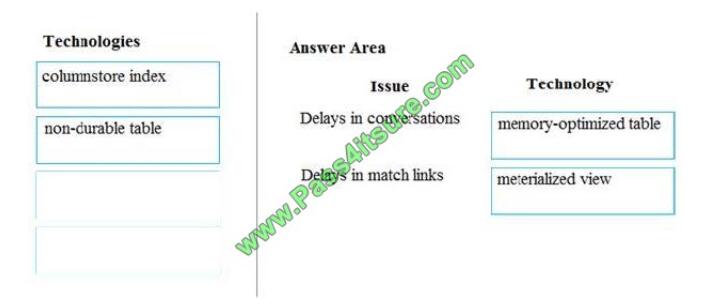

Correct Answer:

Box 1: memory-optimized table

In-Memory OLTP can provide great performance benefits for transaction processing, data ingestion, and transient data scenarios.

Box 2: materialized view

To support efficient querying, a common solution is to generate, in advance, a view that materializes the data in a format suited to the required results set. The Materialized View pattern describes generating prepopulated views of data in environments where the source data isn't in a suitable format for querying, where generating a suitable query is difficult, or where query performance is poor due to the nature of the data or the data store.

These materialized views, which only contain data required by a query, allow applications to quickly obtain the information they need. In addition to joining tables or combining data entities, materialized views can include the current values of calculated columns or data items, the results of combining values or executing transformations on the data items, and values specified as part of the query. A materialized view can even be optimized for just a single query.

References:

https://docs.microsoft.com/en-us/azure/architecture/patterns/materialized-view
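The idea of a prepopulated view can be illustrated with a small in-memory sketch. This is not how Azure SQL Database implements materialized views; it only shows the pattern of doing the expensive comparison work once, ahead of time, so that a later lookup is cheap. The normalization rule, function names, and sample rows are hypothetical.

```python
from collections import defaultdict


def normalize(snippet):
    """Collapse whitespace and lowercase so equivalent snippets compare equal."""
    return " ".join(snippet.split()).lower()


def build_match_view(rows):
    """Precompute a lookup from normalized snippet text to conversation ids."""
    view = defaultdict(list)
    for conversation_id, snippet in rows:
        view[normalize(snippet)].append(conversation_id)
    return dict(view)


# Hypothetical source rows: (conversation id, shared snippet).
rows = [(1, "SELECT  * FROM t"), (2, "select * from t"), (3, "print('hi')")]
view = build_match_view(rows)

# Matching a newly shared snippet is now a single dictionary lookup.
matches = view.get(normalize("SELECT * FROM T"), [])
print(matches)
```

The trade-off is the usual one for this pattern: the view has to be refreshed when the source rows change, in exchange for fast reads.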

Summary:

[Q1-Q13] Free Microsoft DP-200 pdf download https://drive.google.com/file/d/1MsPABHzc7aMSKZ_rHLt5zWq4rkiX8b3C/view?usp=sharing

All the resources in one place: the latest Microsoft DP-200 practice questions and the latest Microsoft DP-200 PDF dumps. The most recently updated Microsoft DP-200 dumps are at https://www.pass4itsure.com/dp-200.html. Study hard and practice a lot. This will help you prepare for the Microsoft DP-200 exam. Good luck!

Written by Ralph K. Merritt
