Looking for a DP-201 PDF or exam dump to download? This post shares the latest Microsoft Certifications DP-201 exam questions and answers, along with a DP-201 PDF, to save you preparation time. The full question set for Microsoft DP-201, “Designing an Azure Data Solution”, is available at https://www.pass4itsure.com/dp-201.html (Q&As: 74). Pass4itsure advertises a first-attempt pass guarantee.

Microsoft Certifications DP-201 Exam pdf

[PDF] Free Microsoft DP-201 pdf dumps download from Google Drive: https://drive.google.com/open?id=1voG3cYhKFklJuG3ZSghQ98UaUGLlj3MN

Related Microsoft Certifications Exam pdf

[PDF] Free Microsoft DP-200 pdf dumps download from Google Drive: https://drive.google.com/open?id=1lJNE54_9AAyU9kzPI_8NR-PPFqNYM7ys

[PDF] Free Microsoft MD-100 pdf dumps download from Google Drive: https://drive.google.com/open?id=1s1Iy9Fx7esWTBKip_3ZweRQP2xWQIPoy

Microsoft exam certification information

Exam DP-201: Designing an Azure Data Solution – Microsoft: https://www.microsoft.com/en-us/learning/exam-dp-201.aspx

Candidates for this exam are Microsoft Azure data engineers who collaborate with business stakeholders to identify and meet the data requirements to design data solutions that use Azure data services.

Azure data engineers are responsible for data-related tasks that include designing Azure data storage solutions that use relational and non-relational data stores, batch and real-time data processing solutions, and data security and compliance solutions.

Skills measured

- Design Azure data storage solutions (40-45%)

- Design data processing solutions (25-30%)

- Design for data security and compliance (25-30%)

Microsoft Certified: Azure Data Engineer Associate: https://www.microsoft.com/en-us/learning/azure-data-engineer.aspx

Azure Data Engineers design and implement the management, monitoring, security, and privacy of data using the full stack of Azure data services to satisfy business needs. Required exams: DP-200 and DP-201. Related blog post: http://www.mlfacets.com/2019/05/13/latest-microsoft-other-certification-dp-200-exam-dumps-shared-free-of-charge/

Microsoft Certifications DP-201 Online Exam Practice Questions

QUESTION 1

You need to design the disaster recovery solution for customer sales data analytics.

Which three actions should you recommend? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

A. Provision multiple Azure Databricks workspaces in separate Azure regions.

B. Migrate users, notebooks, and cluster configurations from one workspace to another in the same region.

C. Use zone redundant storage.

D. Migrate users, notebooks, and cluster configurations from one region to another.

E. Use Geo-redundant storage.

F. Provision a second Azure Databricks workspace in the same region.

Correct Answer: ADE

Scenario: The analytics solution for customer sales data must be available during a regional outage.

To create your own regional disaster recovery topology for Databricks, follow these requirements:

- Provision multiple Azure Databricks workspaces in separate Azure regions.

- Use geo-redundant storage.

- Once the secondary region is created, migrate the users, user folders, notebooks, cluster configuration, jobs configuration, libraries, storage, and init scripts, and reconfigure access control.

Note: Geo-redundant storage (GRS) is designed to provide at least 99.99999999999999% (16 9's) durability of objects over a given year by replicating your data to a secondary region that is hundreds of miles away from the primary region. If your storage account has GRS enabled, then your data is durable even in the case of a complete regional outage or a disaster in which the primary region isn't recoverable.

References:

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy-grs
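To put the 16 nines figure from the note above in perspective, here is a rough arithmetic sketch. It assumes each object is lost independently with the quoted annual probability, which is a simplification of ours and not something the source states; the object count is hypothetical. Exact fractions are used because a 64-bit float cannot represent 16 nines precisely.

```python
from fractions import Fraction

# Annual durability quoted above for GRS: sixteen nines.
NINES = 16
# 1 - durability, kept as an exact fraction to avoid float rounding.
annual_loss_probability = Fraction(1, 10 ** NINES)


def expected_objects_lost(object_count: int) -> Fraction:
    """Expected objects lost in one year, ASSUMING independent losses."""
    return object_count * annual_loss_probability


# Hypothetical storage account holding one trillion objects.
loss = expected_objects_lost(10 ** 12)
print(float(loss))  # 0.0001
```

Under these assumptions, even a trillion stored objects would see an expected loss of about one ten-thousandth of an object per year.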

QUESTION 2

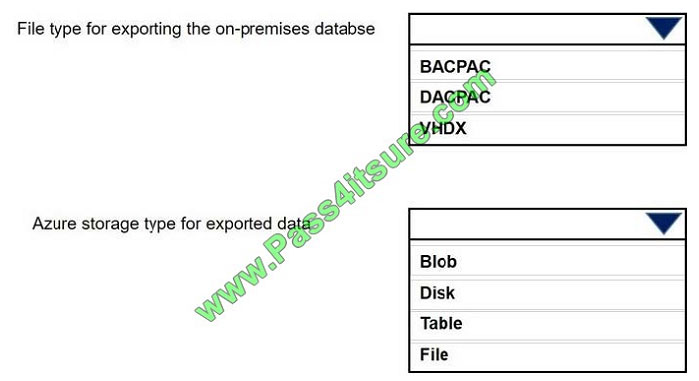

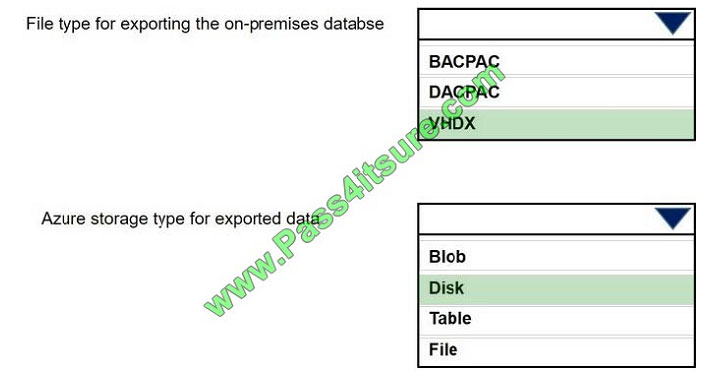

You manage an on-premises server named Server1 that has a database named Database1. The company purchases a new application that can access data from Azure SQL Database.

You recommend a solution to migrate Database1 to an Azure SQL Database instance.

What should you recommend? To answer, select the appropriate configuration in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

QUESTION 3

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

A company is developing a solution to manage inventory data for a group of automotive repair shops. The solution will use Azure SQL Data Warehouse as the data store.

Shops will upload data every 10 days.

Data corruption checks must run each time data is uploaded. If corruption is detected, the corrupted data must be removed.

You need to ensure that upload processes and data corruption checks do not impact reporting and analytics processes that use the data warehouse.

Proposed solution: Configure database-level auditing in Azure SQL Data Warehouse and set retention to 10 days.

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: B

Instead, create a user-defined restore point before data is uploaded. Delete the restore point after data corruption checks complete.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/backup-and-restore

QUESTION 4

A company has many applications. Each application is supported by separate on-premises databases.

You must migrate the databases to Azure SQL Database. You have the following requirements:

Organize databases into groups based on database usage.

Define the maximum resource limit available for each group of databases.

You need to recommend technologies to scale the databases to support expected increases in demand.

What should you recommend?

A. Read scale-out

B. Managed instances

C. Elastic pools

D. Database sharding

Correct Answer: C

SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single Azure SQL Database server and share a set number of resources at a set price.

You can configure resources for the pool based either on the DTU-based purchasing model or the vCore-based purchasing model.

Incorrect Answers:

D: Database sharding is a type of horizontal partitioning that splits large databases into smaller components, which are faster and easier to manage.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool
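The idea of grouping databases under one shared resource limit can be illustrated with a toy model. This is only a sketch: the `ElasticPool` class, the database names, and the eDTU numbers are hypothetical and do not correspond to any Azure SDK.

```python
from dataclasses import dataclass, field


@dataclass
class ElasticPool:
    """Toy model of a pool: member databases share one resource cap."""
    name: str
    max_edtu: int  # hypothetical cap for the whole group
    demand: dict = field(default_factory=dict)  # database name -> current demand

    def set_demand(self, database: str, edtu: int) -> None:
        self.demand[database] = edtu

    def total_demand(self) -> int:
        return sum(self.demand.values())

    def within_limit(self) -> bool:
        # The limit applies to the group, not to each database separately.
        return self.total_demand() <= self.max_edtu


reporting = ElasticPool("reporting", max_edtu=100)
reporting.set_demand("sales_db", 60)
reporting.set_demand("hr_db", 10)
print(reporting.within_limit())  # True: 70 <= 100

reporting.set_demand("hr_db", 50)
print(reporting.within_limit())  # False: 110 > 100
```

A single database may spike as long as the group as a whole stays under the cap, which is why pools suit databases with varying and unpredictable usage.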

QUESTION 5

You need to recommend a backup strategy for CONT_SQL1 and CONT_SQL2. What should you recommend?

A. Use AzCopy and store the data in Azure.

B. Configure Azure SQL Database long-term retention for all databases.

C. Configure Accelerated Database Recovery.

D. Use DWLoader.

Correct Answer: B

Scenario: The database backups have regulatory purposes and must be retained for seven years.

QUESTION 6

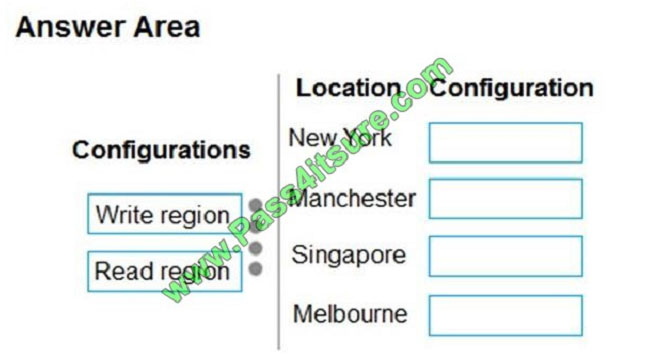

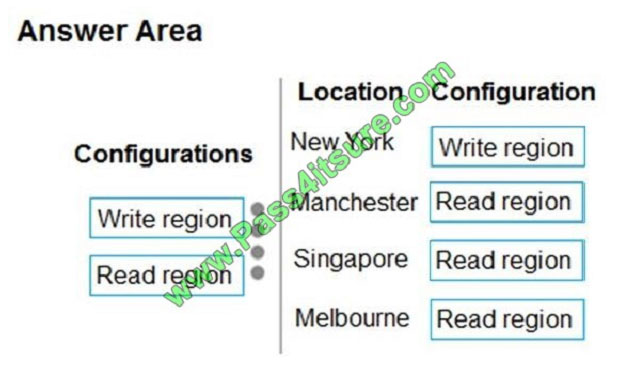

You need to design the image processing solution to meet the optimization requirements for image tag data.

What should you configure? To answer, drag the appropriate setting to the correct drop targets.

Each source may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Correct Answer:

Tagging data must be uploaded to the cloud from the New York office location.

Tagging data must be replicated to regions that are geographically close to company office locations.

QUESTION 7

You are designing an Azure Databricks cluster that runs user-defined local processes. You need to recommend a cluster configuration that meets the following requirements:

- Minimize query latency.

- Reduce overall costs.

- Maximize the number of users that can run queries on the cluster at the same time.

Which cluster type should you recommend?

A. Standard with Autoscaling

B. High Concurrency with Auto Termination

C. High Concurrency with Autoscaling

D. Standard with Auto Termination

Correct Answer: A

QUESTION 8

You have an on-premises MySQL database that is 800 GB in size.

You need to migrate a MySQL database to Azure Database for MySQL. You must minimize service interruption to live sites or applications that use the database.

What should you recommend?

A. Azure Database Migration Service

B. Dump and restore

C. Import and export

D. MySQL Workbench

Correct Answer: A

You can perform MySQL migrations to Azure Database for MySQL with minimal downtime by using the newly introduced continuous sync capability for the Azure Database Migration Service (DMS). This functionality limits the amount of downtime that is incurred by the application.

References: https://docs.microsoft.com/en-us/azure/mysql/howto-migrate-online

QUESTION 9

You plan to deploy an Azure SQL Database instance to support an application. You plan to use the DTU-based purchasing model.

Backups of the database must be available for 30 days and point-in-time restoration must be possible.

You need to recommend a backup and recovery policy.

What are two possible ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Use the Premium tier and the default backup retention policy.

B. Use the Basic tier and the default backup retention policy.

C. Use the Standard tier and the default backup retention policy.

D. Use the Standard tier and configure a long-term backup retention policy.

E. Use the Premium tier and configure a long-term backup retention policy.

Correct Answer: DE

The default retention period for a database created using the DTU-based purchasing model depends on the service tier:

Basic service tier is 1 week.

Standard service tier is 5 weeks.

Premium service tier is 5 weeks.

Incorrect Answers:

B: Basic tier only allows restore points within 7 days.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-long-term-retention

QUESTION 10

You are designing a data processing solution that will implement the lambda architecture pattern. The solution will use Spark running on HDInsight for data processing.

You need to recommend a data storage technology for the solution.

Which two technologies should you recommend? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

A. Azure Cosmos DB

B. Azure Service Bus

C. Azure Storage Queue

D. Apache Cassandra

E. Kafka HDInsight

Correct Answer: AE

To implement a lambda architecture on Azure, you can combine the following technologies to accelerate real-time big data analytics:

- Azure Cosmos DB, the industry's first globally distributed, multi-model database service.

- Apache Spark for Azure HDInsight, a processing framework that runs large-scale data analytics applications.

- Azure Cosmos DB change feed, which streams new data to the batch layer for HDInsight to process.

- The Spark to Azure Cosmos DB Connector.

E: You can use Apache Spark to stream data into or out of Apache Kafka on HDInsight using DStreams.

References: https://docs.microsoft.com/en-us/azure/cosmos-db/lambda-architecture
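For readers new to the lambda architecture pattern named in this question, the following in-memory sketch shows its general shape: an append-only master dataset, a batch view recomputed from all data, and a speed view covering events that arrived since the last batch run. The `LambdaStore` class and its method names are hypothetical; nothing here uses Cosmos DB, Spark, or Kafka.

```python
from collections import Counter


class LambdaStore:
    """Toy lambda architecture: batch view + speed view, merged at query time."""

    def __init__(self):
        self.master = []              # append-only master dataset
        self.batch_view = Counter()   # recomputed from the full master dataset
        self.speed_view = Counter()   # counts for events not yet in a batch run

    def ingest(self, key: str) -> None:
        self.master.append(key)
        self.speed_view[key] += 1

    def run_batch(self) -> None:
        # Batch layer: rebuild the view from scratch, then reset the speed layer.
        self.batch_view = Counter(self.master)
        self.speed_view.clear()

    def query(self, key: str) -> int:
        # Serving layer: combine both views for an up-to-date answer.
        return self.batch_view[key] + self.speed_view[key]


store = LambdaStore()
store.ingest("tag")
store.ingest("tag")
store.run_batch()
store.ingest("tag")
print(store.query("tag"))  # 3
```

In the Azure setup described above, the storage technology would play the role of `master`, with Spark on HDInsight doing the batch and streaming computation.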

QUESTION 11

You are designing an Azure Data Factory pipeline for processing data. The pipeline will process data that is stored in general-purpose standard Azure storage.

You need to ensure that the compute environment is created on-demand and removed when the process is completed.

Which type of activity should you recommend?

A. Databricks Python activity

B. Data Lake Analytics U-SQL activity

C. HDInsight Pig activity

D. Databricks Jar activity

Correct Answer: C

The HDInsight Pig activity in a Data Factory pipeline executes Pig queries on your own or on-demand HDInsight cluster.

References: https://docs.microsoft.com/en-us/azure/data-factory/transform-data-using-hadoop-pig
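The requirement that compute is created on demand and removed when processing completes follows a familiar create, use, tear down pattern. The sketch below shows that lifecycle with a Python context manager. `on_demand_cluster` and the cluster name are hypothetical stand-ins and do not call Data Factory or HDInsight.

```python
from contextlib import contextmanager

events = []  # records lifecycle steps so the order is visible


@contextmanager
def on_demand_cluster(name: str):
    """Hypothetical stand-in for compute that exists only for one activity run."""
    events.append(f"create {name}")
    try:
        yield name
    finally:
        # Runs whether the activity succeeds or fails.
        events.append(f"delete {name}")


with on_demand_cluster("pig-cluster") as cluster:
    events.append(f"run pig script on {cluster}")

print(events)
# ['create pig-cluster', 'run pig script on pig-cluster', 'delete pig-cluster']
```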

QUESTION 12

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

A company is developing a solution to manage inventory data for a group of automotive repair shops. The solution will use Azure SQL Data Warehouse as the data store.

Shops will upload data every 10 days.

Data corruption checks must run each time data is uploaded. If corruption is detected, the corrupted data must be removed.

You need to ensure that upload processes and data corruption checks do not impact reporting and analytics processes that use the data warehouse.

Proposed solution: Create a user-defined restore point before data is uploaded. Delete the restore point after data corruption checks complete.

Does the solution meet the goal?

A. Yes

B. No

Correct Answer: A

User-defined restore points: This feature enables you to manually trigger snapshots to create restore points of your data warehouse before and after large modifications. This capability ensures that restore points are logically consistent, which provides additional data protection in case of any workload interruptions or user errors for quick recovery time.

Note: A data warehouse restore is a new data warehouse that is created from a restore point of an existing or deleted data warehouse. Restoring your data warehouse is an essential part of any business continuity and disaster recovery strategy because it re-creates your data after accidental corruption or deletion.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/backup-and-restore
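The restore-point workflow in this question (snapshot before upload, check for corruption, roll back if needed, then delete the restore point) can be sketched as follows. The `Warehouse` class is a hypothetical in-memory stand-in, and it restores in place for brevity, whereas the note above explains that a real restore creates a new data warehouse.

```python
import copy


class Warehouse:
    """In-memory stand-in for a data warehouse with user-defined restore points."""

    def __init__(self):
        self.rows = []
        self.restore_points = {}

    def create_restore_point(self, label: str) -> None:
        self.restore_points[label] = copy.deepcopy(self.rows)

    def restore(self, label: str) -> None:
        # Simplification: restores in place instead of creating a new warehouse.
        self.rows = copy.deepcopy(self.restore_points[label])

    def delete_restore_point(self, label: str) -> None:
        del self.restore_points[label]


def upload_with_check(wh: Warehouse, batch: list, is_corrupt) -> bool:
    """Upload a batch; roll back to the restore point if any row is corrupt."""
    label = "pre-upload"
    wh.create_restore_point(label)
    try:
        wh.rows.extend(batch)
        if any(is_corrupt(row) for row in batch):
            wh.restore(label)
            return False
        return True
    finally:
        # The restore point is deleted once the corruption check completes.
        wh.delete_restore_point(label)


wh = Warehouse()
print(upload_with_check(wh, [1, 2], lambda r: r < 0))   # True
print(upload_with_check(wh, [3, -1], lambda r: r < 0))  # False
print(wh.rows)  # [1, 2]
```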

QUESTION 13

A company stores data in multiple types of cloud-based databases.

You need to design a solution to consolidate data into a single relational database. Ingestion of data will occur at set times each day.

What should you recommend?

A. SQL Server Migration Assistant

B. SQL Data Sync

C. Azure Data Factory

D. Azure Database Migration Service

E. Data Migration Assistant

Correct Answer: C


The benefits of Pass4itsure!

Pass4itsure offers the latest exam practice questions and answers free of charge. A team of professional exam experts updates the exam questions throughout the year, with the stated aim of a high pass rate and good value for money, so that you can pass the exam on your first attempt.

Summary:

Get the full Microsoft Certifications DP-201 exam dump here: https://www.pass4itsure.com/dp-201.html (Q&As: 74). Follow this blog for regularly updated exam dumps to help you improve your skills.

Written by Ralph K. Merritt
